A new week of blogs about our development in MySQL Cluster 7.6.

After working a long time on a set of new developments, there are a lot of things to describe. I will continue this week by discussing sharding and NDB and a new cloud feature in 7.6, and by providing some benchmark results on restart performance in 7.6 compared to 7.5. I am also planning a comparative analysis for a few more versions of NDB.

In the blog series I have presented recently we have shown the performance impact of various new features in MySQL Cluster 7.5 and 7.6. All these benchmarks were executed with tables that used 1 partition. The idea behind this is that to develop a scalable application it is important to develop partition-aware applications.

A partition-aware application will ensure that all partitions except one are pruned away from the query. Thus its queries get the same performance as a query on a single-partition table.
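To make the idea of pruning concrete, here is a minimal sketch in Python. It is not how NDB is implemented: the `crc32` hash is only a stand-in for the real hash function, and the names `partition_for` and `partitions_to_scan` are hypothetical, made up for this illustration.

```python
import zlib

NUM_PARTITIONS = 8  # assumed partition count, matching the 8-partition case


def partition_for(key, num_partitions=NUM_PARTITIONS):
    """Map a partition key to a partition id (crc32 is a stand-in hash, not NDB's)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partitions_to_scan(partition_key=None, num_partitions=NUM_PARTITIONS):
    """Return the list of partitions a query has to visit.

    With a partition key the query is pruned to exactly one partition;
    without one, every partition has to be scanned.
    """
    if partition_key is None:
        return list(range(num_partitions))
    return [partition_for(partition_key, num_partitions)]
```

A query that supplies the partition key touches one partition regardless of how many partitions the table has, which is why it behaves like a query on a single-partition table.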

Now in this blog we analyse the difference between using 1 partition per table and using 8 partitions per table.

The execution difference is that with 8 partitions we have to dive into the tree 8 times instead of one time, and we have to take the startup cost of the scan in each partition as well. At the same time, using 8 partitions means that we get some amount of parallelism in the query execution, and this speeds up query execution at low concurrency.

Thus there are two main differences between single-partition scans and multi-partition scans.

The first difference is that the parallelism decreases the latency of query execution at low concurrency. More partitions mean a higher speedup.

The second difference is that the data node will spend more CPU to execute the query for multi-partition scans compared to single-partition scans.
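The two differences can be illustrated with a toy cost model. This is only a sketch under strong assumptions: the partitions are scanned fully in parallel on an otherwise idle system, the rows are spread evenly, and `startup_cost` and `per_row_cost` are made-up numbers in arbitrary units. It is not fitted to the benchmark results in this blog.

```python
def scan_cost(rows, partitions, startup_cost=1.0, per_row_cost=0.01):
    """Return (latency, cpu) for one scan in arbitrary units.

    Assumptions (hypothetical, for illustration only):
    - every partition pays the scan startup cost once,
    - rows are evenly spread over the partitions,
    - all partitions are scanned in parallel.
    """
    # Total CPU: one startup per partition plus the work for every row.
    cpu = partitions * startup_cost + rows * per_row_cost
    # Latency: the partitions run side by side, so only one share counts.
    latency = startup_cost + (rows / partitions) * per_row_cost
    return latency, cpu


latency_1, cpu_1 = scan_cost(rows=1000, partitions=1)
latency_8, cpu_8 = scan_cost(rows=1000, partitions=8)
```

In this model the 8-partition scan has lower latency but higher total CPU than the 1-partition scan, which is the trade-off described above. In a real system the gain shrinks as concurrency grows, since the CPUs are then busy with other queries.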

Most of the benchmarks I have shown are limited by the cluster connection used. Thus we haven't focused so much on the CPU usage in data nodes.

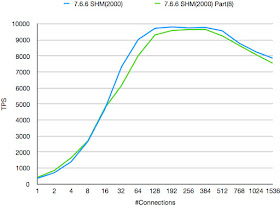

Thus in the graph above the improvement of query speed is around 20% at low concurrency. The performance difference for other concurrency levels is small, although the multi-partition scans use more CPU. The multi-partition scans are, though, a bit more variable in their throughput.

Tests where I focused more on data node performance showed around 10% overhead for multi-partition scans compared to single-partition scans in a similar setup.

An interesting observation is that although most applications should be developed with partition-aware queries, those queries that are not pruned to one partition will be automatically parallelised. This is the advantage of the MySQL Cluster auto-sharded architecture.

In a sharded setup using any other DBMS it is necessary to ensure that all queries are performed in only one shard, since there are no automatic queries over many shards. This means that partition-aware queries will be ok to handle in only one data server, but the application will have to calculate where this data server resides. Cross-shard queries have to be managed manually by the application, though, both sending queries in parallel to many shards and merging the results from many shards.
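As a rough sketch of what that manual work looks like, the following Python example sends one query to every shard in parallel and merges the sorted partial results. The `SHARDS` list and the `query_shard` function are hypothetical stand-ins for real database connections, used only for illustration.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor

# Hypothetical in-memory "shards"; each one holds rows sorted by id.
SHARDS = [
    [(1, "a"), (4, "d")],
    [(2, "b"), (5, "e")],
    [(3, "c"), (6, "f")],
]


def query_shard(shard, min_id):
    """Stand-in for sending one query to one shard."""
    return [row for row in shard if row[0] >= min_id]


def cross_shard_query(min_id):
    """Scatter the query to all shards in parallel, then merge the results."""
    with ThreadPoolExecutor(max_workers=len(SHARDS)) as pool:
        partials = list(pool.map(lambda shard: query_shard(shard, min_id), SHARDS))
    # Each partial result is already sorted, so a k-way merge keeps the order.
    return list(heapq.merge(*partials))
```

The application has to own all of this logic itself: picking the shards, running the queries in parallel, handling failures and merging the results.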

With NDB all of this is automatic. If the query is partition-aware, it will be automatically directed to the correct shard (node group in NDB). If the query isn't partition-aware and thus a cross-shard query, it is automatically parallelised. It is even possible to push join queries down into the NDB data nodes to execute the join queries using a parallel linked-join algorithm.

As we have shown in earlier blogs and will show even more in coming blogs, NDB using the Read Backup feature will ensure that read queries are directed to a data node that is as local as possible to the MySQL Server executing the query. This is also true for join queries being pushed down to the NDB data nodes.
