In previous blogs we have shown how MySQL Cluster can use the Read Backup feature to improve performance when the MySQL Server and the NDB data node are colocated.

There are two scenarios in a cloud setup where additional measures are needed to ensure localized read accesses even when using the Read Backup feature.

The first scenario is when data nodes and MySQL Servers are not colocated. In this case, by default, we have no notion of closeness between nodes in the cluster.

The second scenario is when we have multiple node groups and use colocated data nodes and MySQL Servers. In this case we have a notion of closeness to the data in the node group we are colocated with, but not to the other node groups.

In a cloud setup, closeness depends on whether or not two nodes are in the same availability domain (availability zone in Amazon/Google). In your own network other scenarios could exist.

In MySQL Cluster 7.6 we added a new feature that makes it possible to configure nodes as belonging to a certain location domain. Nodes that are close to each other should be configured to be part of the same location domain. Nodes belonging to different location domains are always considered to be further away than nodes in the same location domain.
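As a minimal sketch of what such a configuration could look like, the Python snippet below renders `config.ini` sections for a hypothetical layout with one data node per availability domain. The node ids, host names and the `render_config` helper are made up for illustration. `LocationDomainId` is, to our knowledge, the name of the configuration parameter in MySQL Cluster 7.6, but check the reference manual for your version before relying on it.

```python
# Hypothetical layout: (section, node id, host name, location domain).
# All ids and addresses are illustrative and not taken from the benchmark.
NODES = [
    ("ndbd", 1, "10.0.1.10", 1),
    ("ndbd", 2, "10.0.2.10", 2),
    ("ndbd", 3, "10.0.3.10", 3),
    ("mysqld", 51, "10.0.1.20", 1),
    ("mysqld", 52, "10.0.2.20", 2),
]


def render_config(nodes):
    """Render one config.ini section per node, tagged with its location domain."""
    sections = []
    for section, node_id, host, domain in nodes:
        sections.append(
            f"[{section}]\n"
            f"NodeId={node_id}\n"
            f"HostName={host}\n"
            # Assumed parameter name; nodes that are close share the same value.
            f"LocationDomainId={domain}\n"
        )
    return "\n".join(sections)


if __name__ == "__main__":
    print(render_config(NODES))
```

In this sketch the location domain simply mirrors the availability domain a node runs in, so the MySQL Server with node id 51 and the data node with node id 1 end up in the same location domain.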

We use this knowledge to always pick a transaction coordinator placed in the same location domain as the MySQL Server and, if possible, to always read from a replica placed in the same location domain as the transaction coordinator.
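The selection rule described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the actual NDB implementation: `DataNode`, `pick_local` and `route_read` are hypothetical names, domain `0` is assumed to mean "no location domain configured", and the first replica in the list stands in for whatever the cluster would pick by default.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataNode:
    node_id: int
    location_domain: int  # 0 means "not configured" in this sketch


def pick_local(candidates, domain):
    """Return the first candidate in `domain`, else fall back to the first candidate."""
    if domain != 0:
        for node in candidates:
            if node.location_domain == domain:
                return node
    return candidates[0]


def route_read(data_nodes, replicas, mysqld_domain):
    """Pick a transaction coordinator near the MySQL Server, then a replica near it."""
    tc = pick_local(data_nodes, mysqld_domain)
    reader = pick_local(replicas, tc.location_domain)
    return tc, reader


if __name__ == "__main__":
    # Two node groups with three replicas each, one replica per location domain.
    group_a = [DataNode(1, 1), DataNode(2, 2), DataNode(3, 3)]
    group_b = [DataNode(4, 1), DataNode(5, 2), DataNode(6, 3)]
    tc, reader = route_read(group_a + group_b, group_b, mysqld_domain=3)
    print(tc.node_id, reader.node_id)
```

The example covers the second scenario from earlier: the row lives in a node group other than the one holding the transaction coordinator, yet the read still lands on a replica in the same location domain.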

We use this feature to direct reads to a replica that is contained in the same availability domain.

This provides a much better throughput for read queries in MySQL Cluster when the data nodes and MySQL servers span multiple availability domains.

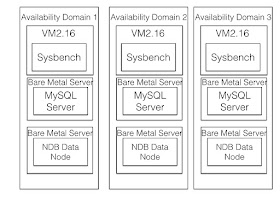

In the figure below we see the setup: each sysbench application works against one MySQL Server, and both of these are located in the same availability domain. The MySQL Server works against a set of 3 replicas in the NDB data nodes. Each of those 3 replicas resides in a different availability domain.

The graph above shows the difference between using location domain ids in this setup and not using them. Some measurements are missing simply because there wasn't enough time to complete this particular benchmark, but the available measurements still show the improvement that is possible, and it is above 40%.

The bare metal servers used for the data nodes were DenseIO2 machines, and the MySQL Servers used bare metal servers without any attached disks; not even block storage is needed in the MySQL Server instances. The MySQL Servers in an NDB setup are more or less stateless, since all the required state is available in the NDB data nodes. Thus it is quite ok to start up a MySQL Server from scratch every time. The exception is when the MySQL Server is used for replicating to another cluster; in this case the binlog state is required to be persistent on the MySQL Server.
